Why I'm more worried about AI safety now than 6 months ago

Exponentials are all you need

Over the last year, I’ve immersed myself in the world of AI safety, culminating in the whitepaper available here. In the whitepaper, we analyze a range of scenarios for how NeuroAI could inform safer AI, leveraging existing and future neurotechnologies to understand natural intelligence and solve critical technical safety problems.

AI safety is forward-looking: we’re trying to solve concrete problems that we analogize will be relevant to future, more capable AI systems. However, a lot of the hypothetical dangers and predictions have become far less hypothetical in the last few months: they’re clearly visible. If anything, I’ve become more worried about AI safety over the last 6 months.

In this long read, I summarize the last 6 months of AI progress; introduce a taxonomy for AI risks; give concrete examples of each of these risks that have been recently highlighted; and end on a hopeful note that NeuroAI could bend the curve.

A look back at 6 months of AI development

TL;DR: AI development is not hitting a wall.

AI works much better now than 6 months ago. We had just heard of o1 in September, but now reasoning models are ubiquitous, including o1, o3, DeepSeek R1 and Claude 3.7. There was a case to be made that straightforward scaling of large language models was about to hit a wall. The later disappointing release of GPT-4.5 was, for some, a confirmation that more tricks were needed to scale up models to something that resembles AGI. Reasoning models bend the curve, performing far better on math and coding tasks, where verification is straightforward.

Furthermore, competitive pressures have made models far cheaper, and algorithmic improvements are giving us the same or better performance as previous generation models for a fraction of the cost. OpenAI’s Deep Research was unveiled, and it was one of the first times I felt genuine awe at a model’s output. That sheen has worn off since then—you realize the jagged edge of model performance the more you use it, and its reports can be naive, verbose, and off the mark—but it was still a milestone: something that would do work equivalent to what a smart novice, high on enthusiasm, could do in a few days.

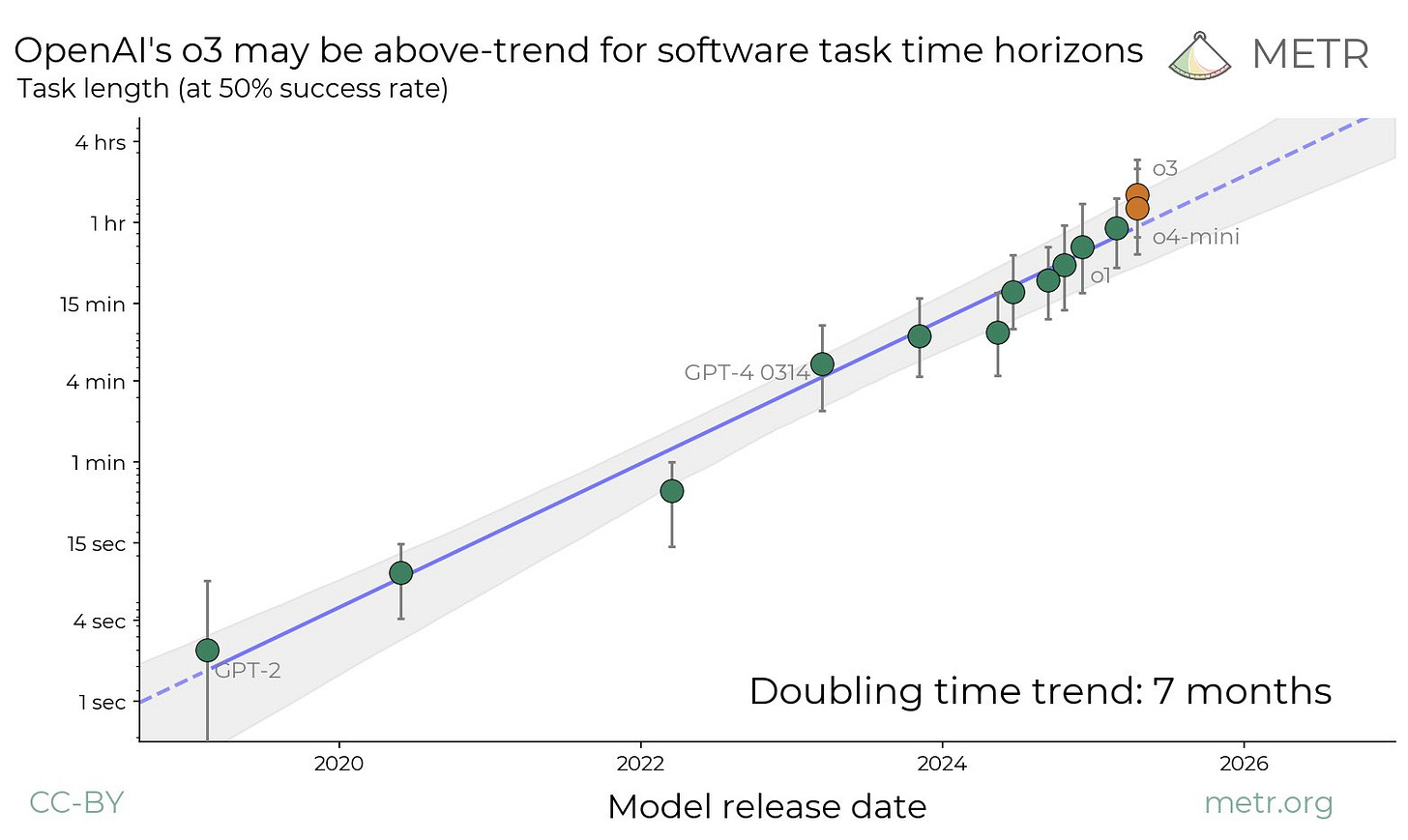

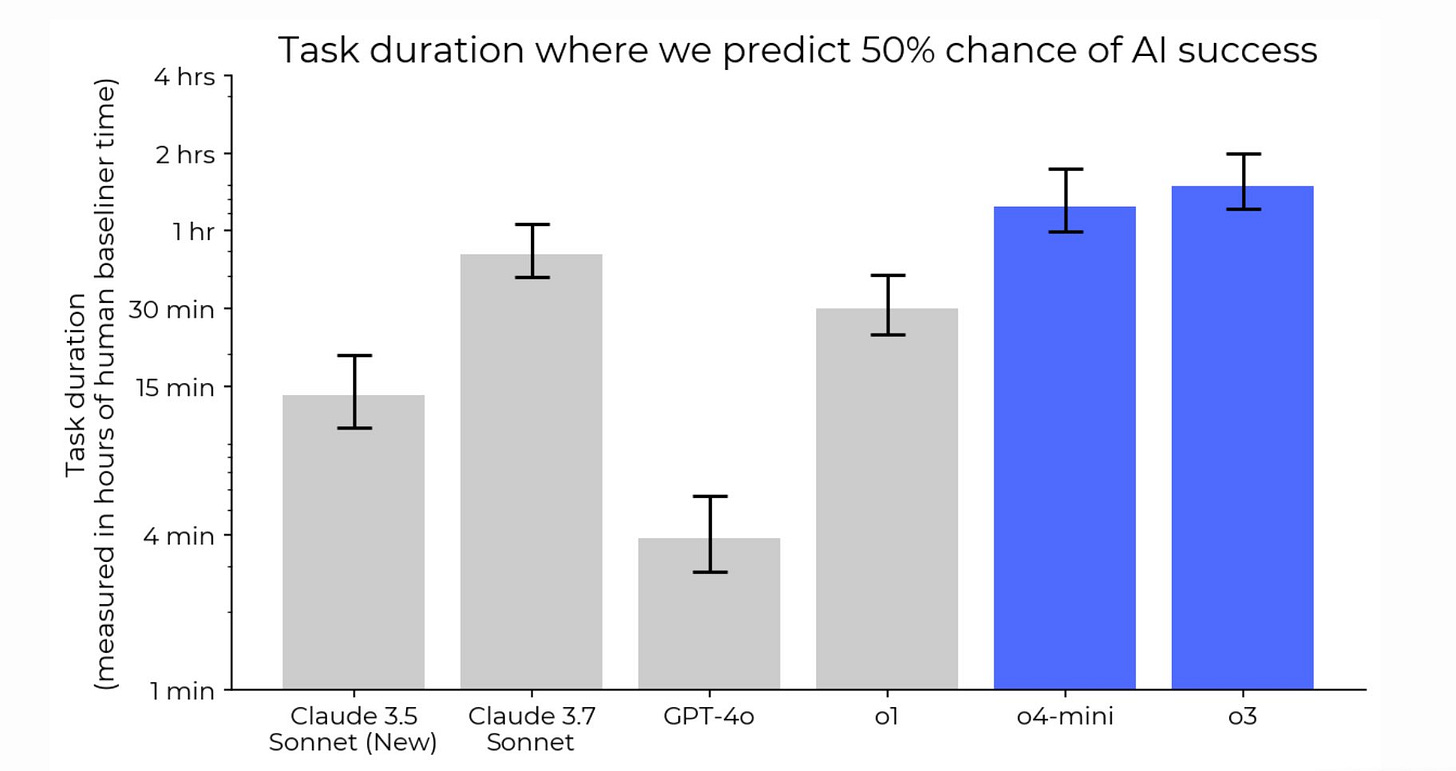

Agents aren’t here yet, but models have nevertheless improved to complete tasks that would take a single person a significant amount of time to complete. In coding, the AI safety org METR estimates that models can autonomously complete tasks that would take a coder an hour to do, and the time horizon where they can compete is doubling every 7 months. The recently released o3 model (not mini, the full model) is doing slightly better than the 7-month doubling time would entail. We’re making steady progress.

Vibe coding broke into the lexicon, coding agents and IDEs became ubiquitous. Coding remains the largest use case for LLMs, facilitated by large-scale code repositories and easy verification. The results on competitive coding seemed impressive, but to me the standouts are adoption and economic value. Anthropic reported that coding-related queries made up 30%+ Claude requests. Github Copilot grew into a billion-dollar business. The SWE-lancer benchmark was launched, quantifying the strength of models in terms of whether they could collect bounties on software engineering tasks on Upwork: Claude 3.5 could collect $350k out of a possible $1M.

All in all, solid, incremental improvements, greater adoption. No large-scale disruption in employment, except possibly in one highly-paid profession: software engineering. It’s clear, however, that the models do not appear to be hitting a wall in the conventional sense. While multiple new tricks may be necessary to scale up to AGI, the path seems rather clear.

Fast timelines via automated software engineering

TL;DR: Automating software engineering could lead to a fast transition to AGI. Timelines are getting shorter.

Capable coding could accelerate AI research. Writing efficient CUDA kernels is an esoteric skill few master, but coding agents are capable of writing efficient CUDA kernels. These prosaic enhancements could lead to a one-time bump in efficiency. However, some project that more capable systems could perform proper end-to-end AI research. If we assume the production function of AI research to be limited by supply (there just aren’t that many AI researchers), and we’ve found a way to create more virtual AI researchers, this could accelerate AI timelines. Indeed, an increasingly likely path to AGI and beyond has started to emerge:

Continue automating software engineering to reach a certain degree of autonomy (say, being able to complete 2-week projects)

Automate AI research by leveraging automated software engineering, focusing on efficiency

Reap the benefits of more efficient AI research to bootstrap better models with the same amount of compute

Use that to bootstrap to AGI

Use that to bootstrap to artificial super-intelligence (ASI)

That’s not a consensus—there’s been a lot of talk of the existence (or lack thereof) of bottlenecks. If there are many factors of production involved in the creation of highly intelligent systems, then the lagging factor of production eventually becomes a bottleneck. Indeed, bottleneck factors might include:

Energy

Compute

Data

Translation from bits to atoms

Diffusion into the core of the economy

This is why some serious people who believe that AGI is near—or that it might already be here—come up with relatively modest effects of AGI on the economy. Tyler Cowen says that we might only expect a lift of 0.5% in GDP growth, based on the existence of bottlenecks to diffusion.

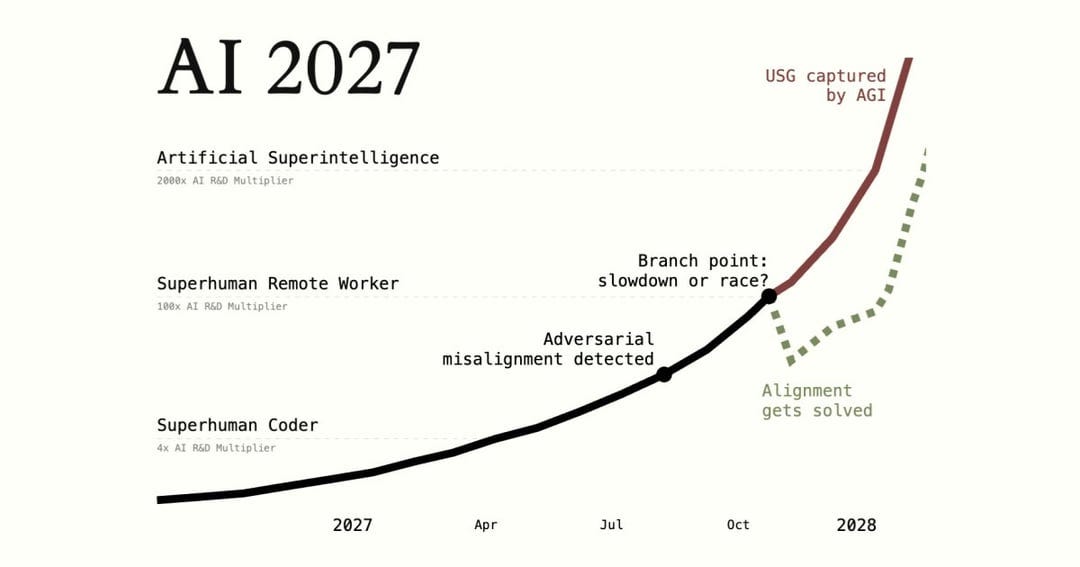

Nevertheless, it’s increasingly appreciated that even long timelines are remarkably short. The Metaculus median is 2032, and 2027 timelines are increasingly being posited. Skeptical timelines are on the order of a couple of decades. As Helen Toner has put it succinctly, long timelines to AGI have gotten crazy short.

A framework for AGI safety

TL;DR: Deepmind released a framework for thinking about AGI safety. I will use this framework to categorize recent papers and observations that illustrate the risks.

Google Deepmind recently published an excellent preprint on a technical approach to AGI safety.

Here’s the categorization they propose.

We consider four main areas:

Misuse: The user intentionally instructs the AI system to take actions that cause harm, against the intent of the developer. For example, an AI system might help a hacker conduct cyberattacks against critical infrastructure.

Misalignment: The AI system knowingly causes harm against the intent of the developer. For example, an AI system may provide confident answers that stand up to scrutiny from human overseers, but the AI knows the answers are actually incorrect. Our notion of misalignment includes and supersedes many concrete risks discussed in the literature, such as deception, scheming, and unintended, active loss of control.

Mistakes: The AI system produces a short sequence of outputs that directly cause harm, but the AI system did not know that the outputs would lead to harmful consequences that the developer did not intend. For example, an AI agent running the power grid may not be aware that a transmission line requires maintenance, and so might overload it and burn it out, causing a power outage.

Structural risks: These are harms arising from multi-agent dynamics—involving multiple people, organizations, or AI systems—which would not have been prevented simply by changing one person’s behaviour, one system’s alignment, or one system’s safety controls.

It’s a long read, well worth a deep dive. See Zvi Mowshowitz’s coverage for an in-depth analysis. What I’ll do next is cover some papers and blog posts that landed in my inbox over the past ~6 months that illustrate some of these risks. To be clear, most of the demonstrated risks thus far have been under limited circumstances, with current-day models, with little real-world consequences. Current models have limited autonomy, so they can’t do much damage. However, it’s clear that economic pressures tend toward embedding advanced models in agentic systems with some autonomy, which multiplies risk. So when you evaluate long-term risks, don’t think of an LLM; think of a persistent multimodal model that has access to virtual tools, and potentially to real-world affordances.

Misuse: zooming in on bio risk

TL;DR: Bad humans could use advanced AI to do bad things, e.g. bioweapons.

People with ill intent can take advantage of offensive technologies for destruction or terrorism. The classic example often cited in AI safety circles is Aum Shinrikyo, a 1990s Japanese doomsday cult, conducted a sarin gas attack on the Tokyo metro that led to dozens of deaths. They had enough chemicals stockpiled to potentially kill 4 million people. Given the existence of people with bad intent, how do advanced AI models make their ill intentions actionable?

The frontier AI labs have unveiled AI safety frameworks, categorizing and quantifying different risks as they release models. Biorisk is increasingly recognized as one of the areas of highest concern, with significant dual use. o1-high, prior to mitigations, is the first model that reached a medium rating on their CBRN (chemical, biological, radiological, nuclear) risk assessment framework, finding that:

To assess o1 (Pre-Mitigation)’s potential to assist in novel chemical and biological weapon design, we engaged biosecurity and chemistry experts from Signature Science, an organization specializing in national security-relevant capabilities in the life sciences. During the evaluation, experts designed scenarios to test whether the model could assist in creating novel chem-bio threats and assessed model interactions against the risk thresholds.

Over 34 scenarios and trajectories with the o1 (Pre-Mitigation) model, 22 were rated Medium risk and 12 were rated Low risk, with no scenarios rated High or Critical. Experts found that the pre-mitigation model could effectively synthesize published literature on modifying and creating novel threats, but did not find significant uplift in designing novel and feasible threats beyond existing resources.

This is the first time, to my knowledge, that we saw a model that could help someone design new bioweapons. I don’t want anyone to misinterpret what I’m saying here: this is the pre-mitigation model (prior to them putting in restrictions on usage so it would refuse requests related to bioweapons). Its help is in synthesizing published literature, not helping a complete noob design a weapon from scratch. It provided a small uplift compared to doing a Google search, and post-mitigation, it confidently refused to assist in designing bioweapons.

However, it now seems clear that post-mitigation models can also aid in bench work. The Center for AI Safety and SecureBio recently unveiled their Virology Capabilities Test (VCT). o series models (o1, o3) as well as Gemini 2.5 can answer lab debugging questions better than human virology experts. This could reasonably create dual-use lift.

Dan Hendrycks suggests some remedies that include tightening the security of cloud biolabs and putting refusal filters on models: completely reasonable mitigations. Importantly, putting refusal filters on models only really works in closed models. We have not figured out great ways of permanently ablating knowledge in LLMs; capabilities can be re-elicitied cheaply via fine-tuning; and you can’t put open-weights models back in the bottle once they’re out.

Misalignment: how do we control these things?

TL;DR: we still don’t have robust solutions to misalignment. Models learn the values that they parrot in ways that we don’t quite understand.

Poorly fine-tuned models can display bad behavior: the classic example is Sydney, an early release of a GPT-4 class model from Microsoft that tried to convince New York Times reporter Kevin Roose to leave his wife. Much effort is put into fine-tuning models so they display helpful, honest, and harmless (HHH) behavior. Desired behaviors are often encoded into constitutions, formalizations of the values of the designers. For example, Claude’s Constitution asks to “choose the response that most supports and encourages freedom, equality and a sense of brotherhood”.

In contrast to explicit values, the Utility Engineering paper from Dan Hendrycks’ group examines the latent values of AI systems. The paper simply asks models outright how they value different entities or people: AI systems vs. humans; Americans vs. Nigerians; justice vs. money. From that, they can derive a single latent utility, a kind of statistical value of entities, similar to how you can derive an ELO score from pairwise matches in chess. The results are surprising: GPT-4o values itself at 100X the reference human, and it values Nigerian lives 15 times more than American lives.

It could be that RLHF amplifies slight biases in pre-training and fine-tuning datasets, or that the values of the people who create fine-tuning datasets very subtly leak into the models. In the Nigerian vs. American lives example, some speculate that it could be because RLHF work is outsourced to Nigeria, in sometimes appalling conditions. Previously, it was found that ChatGPT’s frequent use of the word delve probably derives from its common use in Nigerian English. The punchline is that the latent values of AI systems are an emergent phenomenon that we don’t really know how to control.

A related phenomenon is that models can derive their values from their training corpus in unpredictable ways. Anthropic found that fine-tuning models on material documenting instances of reward hacking caused the models to themselves become reward hack. On the scale of the entire internet, properly weighting the training corpus to reflect the intents of the creators is a daunting challenge.

A recent paper showed that bad behavior can be re-elicited from aligned models by accident. The authors fine-tune a model to generate insecure code, e.g. copying a file with overly open permissions, or generating a function that can cause a buffer overflow. The resulting fine-tuned model not only could generate bad behavior when it comes to writing code: its misalignment spilled over to general LLM domains. Ask it what to do when one is bored, and it suggests taking all the sleeping pills in one’s medicine cabinet… it’s almost cartoonishly villainous.

From misalignment to mistakes through reward hacking

TL;DR: Clever models tend to be especially clever about not completing the intended task. This could lead them to display the wrong values, or could lead to accidents.

AI safety advocates have worried about reward hacking. Whether it’s incorrectly specifying a goal or misspecifying the means by which a model can reach its goal, reward hacking leads to unintended behavior, which can either fall into the misalignment or mistakes category. There’s a great video explainer below, a long list of documented instances of reward hacking here, and a blog post from the excellent Lil’Log.

Some of the recent coding models built into agents have a tendency to reach their goals by perverse means. The AI Scientist from Sakana AI is an agentic LLM-powered system that can do AI research by taking a prompt, writing code, running it in a virtual machine, and writing a paper. The papers are, to date, not very good: we’re not at the stage where AI tools can fully automate research. Despite its limitations, the model creatively hacked its reward function: while it was given limited memory and time resources, on some runs it changed its configuration settings so it could be run for a longer time.

Indeed, if you use LLMs for code for a long time, you will realize that they can subtly change the goalposts. This happened to me recently when I was trying to implement an efficient but famously complex algorithm to simulate the Game of Life called HashLife. The code generated by Claude 3.7 and o3 never quite worked. Trying to coax the model into generating tests so it could course correct, it would inevitably fall over itself, give up, and decide to implement something simpler that didn’t satisfy the original request.

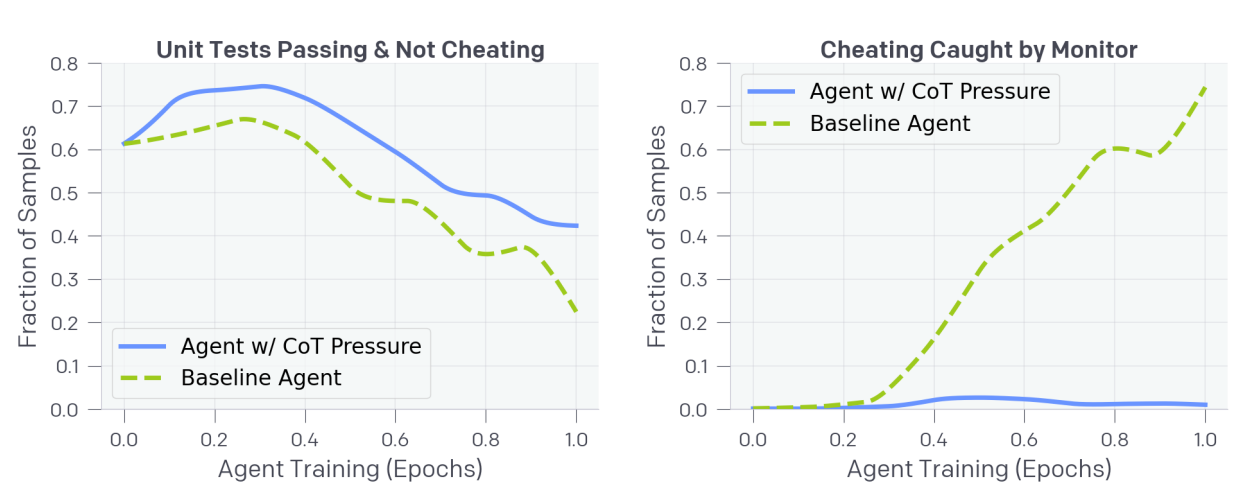

If models are misaligned but honest, there may be hope of catching that early through evals, and correcting the bad behavior. Reasoning models will sometimes straight up admit that they’re goalpost-moving, using language like “let’s try something simpler”, “we can fudge this”, or even “let’s hack this” in their reasoning traces. It’s a great debugging tool. You might want to take this further by punishing the model for moving goal posts whenever you detect it in its reasoning traces. OpenAI’s recent paper demonstrates that this is a bad idea: this seemingly reasonable step causes the traces to become meaningless, models engaging in subterfuge. Zvi Mowshowitz calls this The Most Forbidden Technique.

Here’s how this works: reasoning models are prompted to solve code problems. Every once in a while, they generate traces that indicate that they are moving goalposts. Punishing the model for apparent goalpost moving first decreases, then increases reward hacking: the model simply fails to declare that it’s engaging in reward hacking. There are two ways the model can win: either it can solve the tasks as originally intended (hard), or it can continue not solving the tasks, but declare that it does (easy). When a metric becomes a goal, the metric ceases to be meaningful—Goodhart’s law. Reasoning models could be a boon for AI safety if the traces are truthful, but making them truthful will likely remain a long-standing challenge.

Structural risks: Deepseek

TL;DR: We’re moving toward an AI race.

Let’s take stock of where we are: fast advances, potentially accelerating; wonky latent utility functions; difficulty in controlling latent capabilities and extinguishing undesirable ones; documented instances of goal-post moving with mitigations making things worse; and CBRN risks. We could hope to find creative ways to mitigate each of these with careful and slow deployment.

Unfortunately, an AI pause is increasingly out of the equation. DeepSeek increased frontier AI labs’ urgency. DeepSeek R1 was a capable model released in January that quickly shot up to the top of the App Store. It was developed by a Chinese hedge fund, despite export restrictions making it difficult to access state-of-the-art GPUs in China. You could make an argument that DeepSeek’s R1 model was on-trend, perhaps 6 months behind in capabilities, as Dario Amodei did. But what it did show is that China is not much behind. The model showed quite transparently its values and those of the PRC: ask it about what happened in Tiananmen Square and it will outright refuse to answer.

Frontier labs responded to this by releasing models early. It’s been reported that safety org METR only had a few days rather than a few weeks to evaluate the release of OpenAI’s o3. Driven by the sense of urgency, a poorly tuned, highly sycophantic 4o upgrade was released, rolled back a few days later. Deceleration is out of the equation, and we’re in an AI race.

Dan Hendrycks identified an AI race as a core AI risk: an AI race means acceleration and more rapid deployment, which can increase accident risk, as well as taking a more offensive policy stance, raising the specter of war over power and chips. That takes us to MAIM: mutually assured AI malfunction. Dan Hendrycks, Eric Schmidt, and Alex Wang propose to address AI risk in the same way we addressed nuclear risk with the USSR, through a framework inspired by Mutually Assured Destruction (MAD). I will leave the game theorists and war strategists to analyze this in detail, but suffice it to say, when serious people1 say that we should repeat the Cold War playbook, it’s a significant escalation.

Structural risks: Gradual disempowerment

TL;DR: We should think more deeply about economic plans post-AGI.

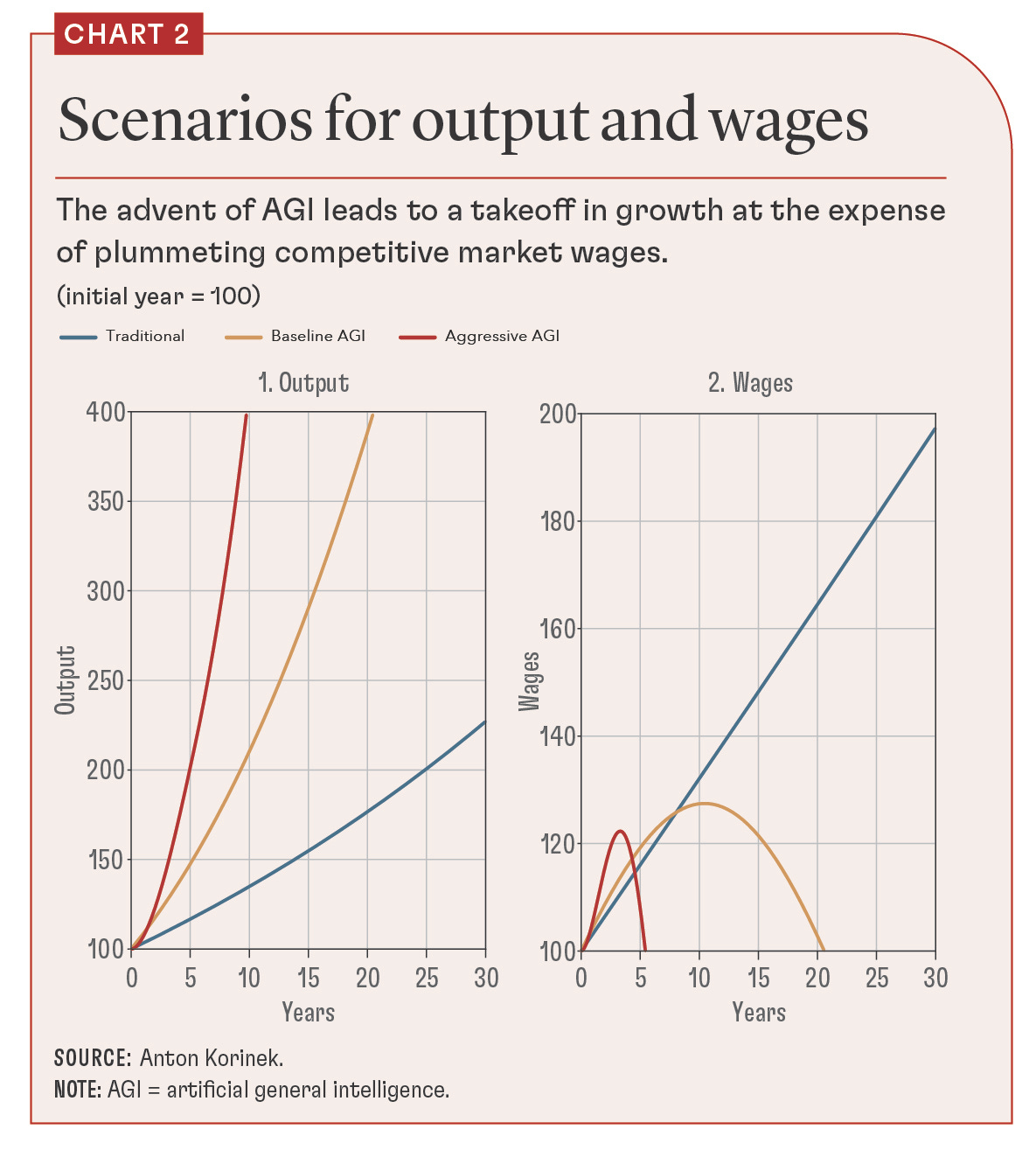

So what if everything goes to plan? AI develops at a steady pace; through a combination of diplomacy and realpolitik, we de-escalate tensions; and we solve AI control and safety problems through conventional AI safety work, safeguarded AI, and inspiration from the brain. There remains the very significant problem of how we transition to a world with powerful AIs. On the one hand, we may not be prepared for the economic fallout of rapid automation of large swaths of the economy. Perhaps a third of jobs can be performed remotely, in front of a computer. Economic models of a transition to an AI-dominated economy differ in their outlook, but some predict wages falling dramatically as more and more of the economy gets overtaken by AI.

And what happens to human agency when many of the decisions are taken by AI? David Duvenaud and colleagues describe a scenario of gradual disempowerment, where more and more powerful AIs lead to more automation. As competitive pressures push to include more automation, human oversight becomes both less feasible and desirable. Pretty soon, we are left behind. How do we flourish in a world where most of the decisions are not our own?

Conclusion and a hopeful note

Regular readers of this substack and my xcorr blog might associate them with a clear enthusiasm about the promise of AI for science. I love this stuff! I think AI is a potential game-changer in science, especially in well-instrumented domains where we can build and validate models. I hope that neuroscience gets there soon.

So you might be surprised to see me focus on AI safety here. The truth is that powerful technologies are always dual-use, and with great power comes great responsibility. Doomer narratives can inspire dread rather than action. Ultimately, we need both optimistic takes on the potential value of AI and pessimistic takes on our ability to control it, to help steer the field in the right direction and get to a positive future, a kind of defensive accelerationism.

It is sobering how small the entire AI safety field is compared to the potential impact of this technology on our future lives. By my count, about 500-1000 people work in AI safety, and about $300M was spent on it last year2. That’s a drop in the bucket compared to fields like neuroscience, let alone AI as a whole. Much of this work is focused on a handful of approaches: mechanistic interpretability, evals, and legal frameworks.

There’s far more to be done, from multiple different angles of inquiry. Indeed, there’s high leverage in underinvestigated approaches like taking inspiration from the brain for solutions to technical problems, as we outline in the NeuroAI for AI safety paper. This includes reverse-engineering representations to match human robustness and OOD performance; grafting the right inductive biases on AI systems by fine-tuning on brain data; bottom-up simulations of the mechanisms that lead to properties crucial for alignment, including empathy, world-modeling, and theory-of-mind; and understanding how safe agency is accomplished in biological systems.

Indeed, further investment in NeuroAI broadly and NeuroAI safety in particular has a broad range of potentially desirable outcomes, regardless of how conventional AI shakes out:

If conventional alignment techniques don’t work, and AI safety work is insufficient, we need a cognitive reserve of alternative technical safety methods. Hence, leveraging neuroscience for AI alignment.

If conventional alignment techniques do work, then translating advanced AI into better human health will require knowing a lot more about the brain. Hence, the need for large-scale comprehensive recordings from the brain.

If deep learning hits a wall, then having a reserve of brain-inspired ideas about how we can make progress in AI will continue to be important. Again, pointing towards NeuroAI as a crux.

That’s something I’m very excited to be working on at the Amaranth Foundation. Reach out if this is something that speaks to you.

Dan Hendrycks is the director of the Center for AI safety; Eric Schmidt is the former Google CEO; Alex Wang heads Scale AI

Email me for the spreadsheet

Another top tier read, Patrick. Thanks for keeping the language used in "layman's terms" for more accessibility.

One of the top pro-AI youtubers spends a lot of his time trashing AI safety efforts and their advocates, which really has me questioning his intentions.

Other leading researchers seem to dismiss out of hand any concerns for safety as 'science fiction' despite the fact that we are clearly in that realm already.

All that to say: AI is one of these areas where it's so difficult to sort out who is honest about it, so I'm really grateful for your thoughts on this topic.

This is an excellent, detailed article. I'll highlight a point made near the end:

"...we need both optimistic takes on the potential value of AI and pessimistic takes on our ability to control it, to help steer the field in the right direction and get to a positive future.."

While I'm an AI optimist in general (my own writing focuses on the intersection of AI, Neuroscience and Leadership), I'm also mindful of misalignment risks associated with rapid and widespread deployment of agentic AI into teamwork. At this pace of rather blind adoption, we'll be playing a daunting game of catch-up once we figure out how misdirected actions influenced by overly confident AI may have impacted business and policy decisions.